Archive for the 'Hollywood: Artistic traditions' Category

The magic number 30, give or take 4

David here–

When I was teaching, students often came to my office hours with variants of this question. “I want to direct Hollywood movies. When should I start trying to get into the industry?”

My answer usually ran this way:

Your prospects are almost nil. It’s tremendously unlikely that you will become a professional director. You may wind up in some other branch of the industry, though. In any case, start now. Aim for summer internships while you’re in school, and try to get a job in LA immediately after graduation—preferably through a friend, a relative, a relative of a friend, or a friend of a relative.

My answer still seems reasonable, but recently I’ve begun to wonder if it holds up across history. Suppose we refocus our goggles on the macro scale. At what ages do most Hollywood directors make their first feature? If we can come up with a solid answer, it would be one instance in which studying film history can have very practical consequences.

No country for old men

In any field, most people would expect to have their careers moving along by the time they hit their early thirties. But aspiring filmmakers may think that there’s a long period of preparation–working in a mailroom, sweating over screenplays–before they finally get to direct. Directing films is a top-of-the-heap role. Who would trust a young first-timer with a multimillion-dollar investment and authority over far more experienced performers and technicians?

Nearly everybody, actually.

Executives tend to be oldish and not fully aware of what the target movie audience likes. A few years ago a friend of mine, consulting for the studios, went to a meeting of suits and was told that all the new digital sampling and mixing software was purely an effort to steal music. He replied that there were legitimate creative uses of that technology. “For example?” one exec asked. My friend said, “Well, there’s Moby.” Nobody at the table knew who Moby was.

So hiring young people in touch with their peers’ tastes makes sense. Indeed, many of today’s top filmmakers started making features quite young. I haven’t made an exhaustive survey, but picking directors more or less at random yields some pretty interesting results.

Note: It’s hard to know how long a movie took to make, so I’ll use the release date as my benchmark. With the exceptions noted at the end, I haven’t checked people’s birthdays against day and month release dates. As a result, the ages of directors that I’ll be giving fall within a year of the releases. That works well enough to establish an approximate age range for a general tendency. And since it takes at least a year to get a project finished and released, we have to remember that most of these people started work on their first feature quite a bit earlier than is indicated by the age I give.

Start with directors who broke through in the 1990s and 2000s. Many were about 30 when their first features came out: Keenen Ivory Wayans (above; I’m Gonna Get You Sucka), Michael Bay (Bad Boys), David Fincher (Alien 3), and Spike Jonze (Being John Malkovich). A tad older at their debuts were Alex Proyas at 31 (The Crow), Cameron Crowe at 32 (Say Anything), James Mangold at 32 (Heavy), Karyn Kusama at 32 (Girlfight), McG at 32 (Charlie’s Angels), David Koepp at 33 (The Trigger Effect), Allison Anders at 33 (Border Radio), and Mark Neveldine at 33 (Crank).

Actually these are the older entrants. A great many current directors completed their first feature in their twenties. Quentin Tarantino, Reginald Hudlin, Roger Avary, and Joe Carnahan debuted at 29, Sofia Coppola and Brett Ratner at 28, Peter Jackson and F. Gary Gray at 26.

Go a little further past 33 and you’ll find a few big names like Alexander Payne (35), Nicole Holofcener (36), Simon West (36), and Ang Lee (38). Almost no contemporary directors broke into the game past 40. The most striking case I found is Mimi Leder, who signed The Peacemaker when she was 45.

On the whole, the directors who start old have found fame and power playing other roles. When you’re David Mamet, you can tackle your first feature at 40. (He’s my age; don’t think this doesn’t make me depressed.) If you’re a producer like Bob Shaye or Jon Avnet, you can start directing in your fifties. Actors, especially stars, get a pass at any age. Mel Gibson made his first feature at 37, Steve Buscemi at 39, Barbra Streisand at 41, Anthony Hopkins at 59.

Putting aside such heavyweights, the lesson seems to be this. In today’s Hollywood, a novice director is likely to start making features between ages 26 and 34. It’s also very likely that he or she will have already done professional directing in commercials, music videos, or episodic TV, as many of my samples did.

But what about the Movie Brats?

Is today’s youth boom a new development? The Baby Boomers will yelp, “No! Everybody knows that we invented the director-prodigy.” Look:

*Dementia 13: Francis Ford Coppola, 24. If you don’t want to count that, You’re a Big Boy Now was released when Coppola was 27.

*Who’s That Knocking at My Door?: Martin Scorsese, 25.

*Dark Star: John Carpenter (above), 26.

*THX 1138: George Lucas, 27.

*Night of the Living Dead: George Romero, 28.

*The Sugarland Express: Steven Spielberg, 28. If you count the TV feature Duel, subtract 2 years.

*Targets: Peter Bogdanovich, 29.

*Dillinger: John Milius, 29.

*Blue Collar: Paul Schrader, 32.

*Good Times (with Sonny and Cher): William Friedkin, 32.

*Easy Rider: Dennis Hopper, 33.

True, the 70s also revved up veterans like Altman and Ashby, but it was the new generation, we’re always told, who made the difference. Hollywood was run by old men who had brought the studio system to the brink of ruin. The twentysomethings smashed the barricades and made a place for young filmmakers.

It might have seemed that way at the time, but the belief was probably due to a quirk of history. The 1960s and early 1970s were in one respect a unique period in the history of American cinema. During those years, the studios had the widest range of age and experience ever assembled. For the first time in history, Hollywood looked like a retirement home.

Look at it this way. In the 1960s, films were being made by directors who had started in the 1950s (e.g., Arthur Penn, Sidney Lumet), as you’d expect. But at the same time there were films by directors who had started in the 1940s: Fred Zinnemann, Sam Fuller, Robert Aldrich, Anthony Mann, Nicholas Ray, Vincente Minnelli, Robert Rossen, Robert Wise, Mark Robson, and many others. Furthermore, some of the biggest names of the 1960s were directors who had started in the 1930s, like George Cukor, Carol Reed, George Stevens, Otto Preminger, and Joseph Mankiewicz. More remarkably, the 1960s saw the release of films by masters who had begun in the 1920s, notably Hitchcock, Hawks, Capra, and Wyler.

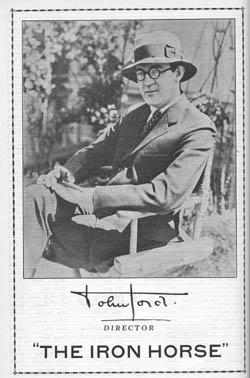

And believe it or not, several directors who had begun in the 1910s were still active nearly fifty years later. John Ford is the most obvious case, but Chaplin, Allan Dwan, Henry King, Fritz Lang, George Marshall, and Raoul Walsh signed films in the 1960s. When Sidney Lumet celebrates a half-century of directing with the upcoming release of Before the Devil Knows You’re Dead, he will be considered a rare bird in today’s cinema; but in a broader historical context he doesn’t stand alone.

Interestingly, the arrival of the Movie Brats and the blockbuster brought nearly all the veterans’ careers to a halt. No matter what decade an old-timer started, by the mid-1970s he was most likely dead, retired, or making flops. Perhaps the Movie Brats’ sense of Hollywood as a haunted house was created in part by the felt presence of six decades of history.

The studio years

But what about those codgers? At what age did they get started? A look at the evidence suggests that the rise of the twentysomething Movie Brats has plenty of precedents.

The Hollywood studios ran a sort of apprentice system. In the 1920s and 1930s, short comedies and dramas were the era’s equivalent of music videos and commercials—ephemeral material that allowed youngsters to learn their craft. For example, George Stevens started as a cameraman, and at age 26 graduated to directing short comedies. Three years later he made his first feature (The Cohens and Kellys in Trouble, 1933).

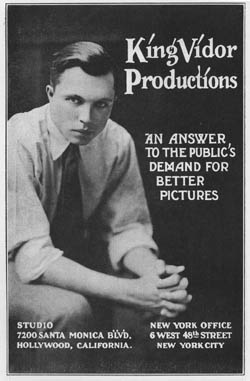

Stevens wasn’t atypical. In the 1920s and 1930s, major directors seem to have started as young as they do now. King Vidor began in features at 25, Rouben Mamoulian at 26. Mervyn LeRoy’s first feature was released when he was 27, the same age that Keaton, Capra, and Sam Taylor entered the field. Byron Haskin and W. S. Van Dyke made their debuts at 28. Hawks started at 30, as did Victor Fleming, Lewis Milestone, and Joseph H. Lewis. Clarence Brown signed his first solo feature at 31, the same age as Jules Dassin and George Cukor at their debuts.

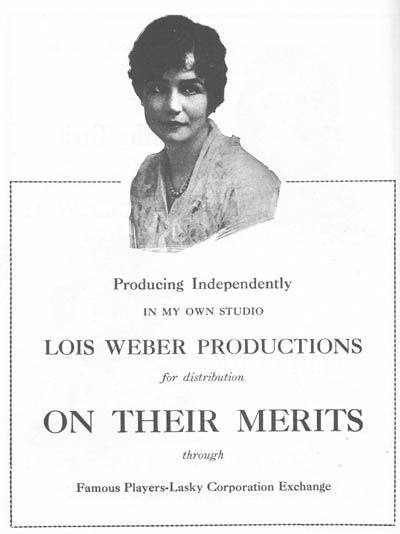

Even more remarkable is what went on during the 1910s. As Hollywood was becoming a film capital, it became a playground for twentysomethings. Lois Weber, Allan Dwan (who may have directed 400 movies), James Cruze, Reginald Barker, George Marshall, George Fitzmaurice, Raoul Walsh, and many others directed their first features before they were thirty. (At this point, a feature film could be around an hour. Kristin explains here.) Cecil B. DeMille was on the senior side, having directed The Squaw Man (1914) at age 33. Griffith was then even older of course, but having started in 1908, somewhat earlier than this generation, his authority waned as theirs rose. And he had started directing within my golden zone, at age 33.

Why so many youngsters? In the 1910s, the industry was growing rapidly and it needed a lot of labor. Few people went to college, so teenagers could take low-level jobs at the studios straight out of high school, or even without a secondary education. Moreover, Kristin reminds me that at this time life expectancy was much lower than today; in 1915 a white male on average would live only 53 years. Beginners hurried to get started, and bosses probably found comparatively few oldsters around.

At the other extreme, the World War II years seem to have been a tough time to break in. Anthony Mann, Nicholas Ray, Don Siegel, Richard Brooks, Robert Aldrich, Robert Rossen, and other gifted directors had to wait until their mid- to late thirties before taking the director’s chair in the second half of the 1940s. The 1950s somewhat put the premium on youth again with fresh blood, especially from the East Coast. The most famous instances are Stanley Kubrick (Hollywood feature debut at 28), John Frankenheimer (debut at 30), and John Cassavetes (Hollywood debut at 32).

In short, Hollywood has always favored fresh-faced novices. It’s not surprising. Young people will work exceptionally hard for little pay. They have reserves of energy to cope with a milieu dominated by deadlines, delays, long hours, and rough justice. They are more or less idealistic, which allows them to be exploited but which also infuses the creative process with some imagination and daring. The executives need wisdom, but the talent needs, as they say, a vision.

Some last questions

Of course in every era a few older hands start directing features comparatively late. Lloyd Bacon, auteur of the masterpiece Footlight Parade, didn’t make a feature (Broken Hearts of Hollywood, 1926) until he was thirty-seven, after dozens of shorts. Daniel Petrie was forty when his 1960 feature The Bramble Bush was released, and Julie Dash was forty when Daughters of the Dust came out. But you have to look hard to find careers starting this late. Most of the evidence I’ve seen indicates that first features skew young.

First question, then: Is the tendency to seize talent young unique to Hollywood, or is it a characteristic of other popular film industries? My hunch is that it has been a worldwide phenomenon. Eisenstein, who was 27 when he made Potemkin, was treated as the oldest of his cohort of Soviet directors; at the other end of that scale were Grigori Kozintsev and Leonid Trauberg, who collaborated on their first film when they were 19! In Japan, Ozu, Mizoguchi, and Kurosawa started directing in their twenties. Michael Curtiz was 30 when he made his first feature, which happened to be Hungary’s first as well. The very name of the French New Wave was borrowed from a sociologist’s catchphrase for postwar twentysomethings. Today, in Europe or Asia, most beginning directors are in their mid-to-late twenties or early thirties. I suspect that everywhere the film industry gobbles up youth, both in front of and behind the camera.

Second, a question for further research. Hollywood hires a lot of first-timers, but how many of them build long-lasting careers? It’s sometimes said that it’s easier to get a first feature than a second.

Third, who’s the youngest director to make a Hollywood feature? The most famous candidate for the Early Admissions program is Orson Welles, who was 25 when Citizen Kane premiered. But on this score at least his old rival William Wyler had him beat. Wyler was 24 when he released his first feature. The fact that his mother was a cousin of Carl Laemmle, boss of Universal, doubtless helped him start near the top.

So is Wyler, at 24, the winner? No. John Ford, Ron Howard, and Harmony Korine were 23 when their first features opened. But wait: Leo McCarey was only 22 when his first feature (Society Secrets) was released in 1921. M. Night Shyamalan’s first feature, Praying with Anger, achieved a limited release in 1993, when he was 22.

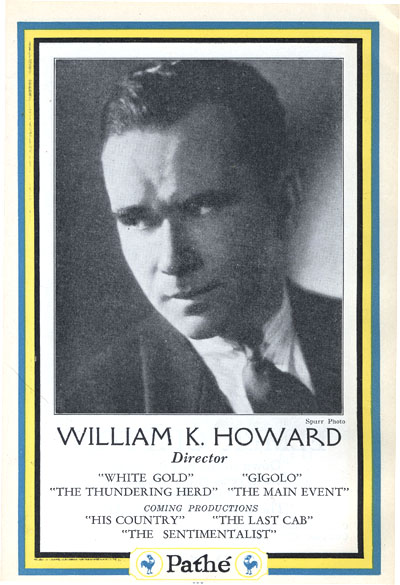

Yet I think that William K. Howard has the edge. Born on 16 June 1899, he signed two features in late 1921, when he was 22, putting him abreast of McCarey and Shyamalan. But Howard also co-directed a feature that was released on 22 May 1921—about three weeks before his twenty-second birthday. Brett and Sofia and PJ, you are so over the hill.
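For readers who want to check the arithmetic, here is a minimal sketch of the two ways of counting used in this entry: the rough year-minus-year age I give for most directors, and the exact age on the release date that I checked for Howard. The function names are my own inventions for illustration; the only data are the two dates given above.

```python
from datetime import date


def year_only_age(birth_year: int, release_year: int) -> int:
    """Rough age: release year minus birth year (can overstate by one)."""
    return release_year - birth_year


def age_on(birth: date, day: date) -> int:
    """Exact age in whole years on a given day."""
    had_birthday = (day.month, day.day) >= (birth.month, birth.day)
    return day.year - birth.year - (0 if had_birthday else 1)


# Dates taken from the paragraph above.
howard_birth = date(1899, 6, 16)
co_directed_release = date(1921, 5, 22)

rough = year_only_age(howard_birth.year, co_directed_release.year)
exact = age_on(howard_birth, co_directed_release)
days_short = (date(1921, 6, 16) - co_directed_release).days

print(rough)       # 22
print(exact)       # 21
print(days_short)  # 25
```

The rough count makes Howard 22, level with McCarey and Shyamalan; the exact count shows he was still 21, with 25 days to go before his birthday. That gap is why the ages elsewhere in this entry are only good to within a year.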

(Once we move out of the US, the clear winner would seem to be Hana Makhmalbaf. When she was ten, she served as AD for her sister Samira on The Apple, and she signed a documentary feature when she was fifteen. “I used to get excited over the words sound, camera, action. There was a strange power in these three words. That’s why I quit elementary school after second grade at age eight.”)

Finally, how does a little knowledge of film history help us steer hopefuls on the road to Hollywood? It gives us a sharper sense of timing and opportunity. My best revised answer would be this.

Start as young as you can, in any capacity. For directing music videos and commercials, the window opens around age 23. For features, the best you can hope for is to start in your late twenties. But the window closes too. If you haven’t directed a feature-length Hollywood picture by the time you’re 35, you probably never will.

Unless your last name is Bruckheimer, Bullock, Kidman, Cruise, or Bale.

PS 16 Sept 2007: Some corrections and updates: I’m not surprised that my more or less random sampling missed some things. So I’m happy to say that we have new contenders in the youth category.

Charles Coleman, Film Program Director at Facets in Chicago, pointed out that Matty Rich was only 19 when Straight Out of Brooklyn was released in 1991. So my candidate, William K. Howard, is second-best at best.

As for Hana Makhmalbaf, she’s been beaten too. Alert reader Ben Dooley wrote to tell me of Kishan Shrikanth, who was evidently ten years old at the release of his feature, Care of Footpath, a Kannada film featuring several Indian stars. Kishan, a movie actor from the age of four, has been dubbed the world’s youngest feature film director by the Guinness Book of World Records. You can read about him here, and this is an interview.

From Australia, critic Adrian Martin writes to point out that Alex Proyas made features before The Crow. “A clear case of Hollywood/ Americanist bias!! (Just kidding.) Proyas had an extensive career in Australia before the USA, and that includes his first, intriguing feature, Spirits of the Air, Gremlins of the Clouds, in 1989. Which puts him around 27 at the time!”

Finally (but what is final in a discussion like this?) over at Mark Mayerson’s informative animation blog, there’s a discussion about animation directors, who tend to be quite a bit older when they sign their first full-length effort. Mark’s reflections and the comments point up some differences between career opportunities in live-action and animated features.

PPS 27 December 2007: Malcolm Mays, currently 17 years old, is shooting a feature under studio auspices. Read the inspiring story here.

I broke everything new again

DB here:

Today some notes about cinematic storytelling in two trilogies, one long and one lasting only ninety seconds.

Extreme ways are back again

Yes, one more entry on The Bourne Ultimatum, which I thought I was done with. But alert reader Damian Arlyn sent me an email proposing an ingenious explanation for Ultimatum’s repetition of a scene from The Bourne Supremacy. (Something like his idea is also suggested here and here.)

After yet another viewing, I agree: That scene is the same piece of plot action, replayed in a new context. This makes the film’s structure more interesting than I’d thought in my entries here and here.

Continue at the risk of spoilers.

At the climax of Supremacy, Jason kills Marie’s assassin in a traffic-clogged tunnel. He then visits the daughter of the Russian couple he murdered during his first CIA mission. The epilogue of Supremacy takes place in New York City. Jason calls Pamela Landy while he watches her in her office from an opposite building. Apparently as a token of thanks, Pam tells him his real name and adds his birthdate: “4/15/71.” Supremacy ends with him striding off with a shoulder bag and vanishing into the Manhattan crowd.

The phone conversation is replayed in Ultimatum. Bourne and Pam are in the same clothes, her office furniture is the same, what we hear of the dialogue is identical. But now the sinister Deputy Director Noah Vosen is monitoring the call, so at moments we see the scene refracted through his and his technicians’ eavesdropping. Just as important, Pam’s report of Jason’s birthdate turns out to be inaccurate. She has given him a coded reference: the building where he received his initiation into the assassin’s life is 415 71st Street. He heads there to confront Dr. Albert Hirsch, his Svengali. The script has played fair with us on this: We’ve had opportunities to glimpse Bourne’s real birthdate (9/13/70) on the paperwork Pam flicks through in an earlier scene.

You could argue that this replay dilutes the emotional force of the Supremacy scene. Instead of giving Jason back his identity, a gesture of emotional bonding, Pam presents yet another clue of the sort that has linked Bourne’s quest throughout the film. Feeling, one might say, has been replaced by espionage-movie plot mechanics. But I think that the device is more interesting than that. For one thing, if you’ve seen Supremacy, there will still be the lingering memory of your sense of Pam’s sincerity and Jason’s gratitude. Just as intriguing are the structural consequences.

If we imagine the second and third installments in the franchise as presenting one long story, the original phone conversation in Supremacy comes after most of the events we see in Ultimatum. Ultimatum begins with Bourne in Moscow, limping as he was upon leaving the daughter’s apartment and still spattered with blood from the tunnel chase. That is, the prologue of this film carries on continuously from the end of the second film’s climax, but long before its epilogue in New York.

After a title announcing, “Six Weeks Later,” Bourne’s European globetrotting in Ultimatum begins. Meanwhile, back at the CIA Pam explains that Jason had gone to Moscow to find the daughter. The bulk of the film carries him to Paris, London, Madrid, and Tangier, all the while trailed by the agency. The final clue brings Bourne to Manhattan. Only then does he visit the building opposite the CIA station and make the call we’ve already, and incompletely, seen at the close of the second film. This new version of the scene initiates the final section of Ultimatum, as Bourne sends the agents on a false trail, breaks into Noah’s office to grab incriminating documents (which he tucks into that shoulder bag), and makes his way to 71st Street and his fateful confrontation with Hirsch.

So the two films mesh intriguingly. Most of film 3 takes place just before the end of film 2, making the epilogue of 2 launch the climax of 3. This is a step beyond the more common sort of flashback to founding events in the story chronology, the tactic used at the start of Indiana Jones and the Last Crusade and The Lord of the Rings: The Return of the King. In these films, the jump back in time is more obviously signposted than in the Bourne movies.

The screenwriters Tony Gilroy, Scott Z. Burns, George Nolfi, and Tom Stoppard (uncredited) have made a clever and welcome innovation that I didn’t appreciate at first. I’m happy to finally understand it, though, because it fits into a trend I discussed in The Way Hollywood Tells It and an earlier entry. Some filmmakers today create looping, back-and-fill story lines that tease us into watching the movie over and over on DVD, à la Memento and Donnie Darko. There are still some Bourne questions lingering in my mind, so I guess Universal can sign me up as a DVD customer. . . sigh.

I’ve seen so much in so many places

Speaking of The Way, there I also noted the growth in contemporary cinema of what I call network narratives, or what Variety calls criss-crossers. These are films with several protagonists connected by friendship, kinship, or accident. The characters pursue different goals but as the film develops their actions affect one another. The classic examples are Nashville, Short Cuts, and Magnolia, but in writing about this strategy for my collection Poetics of Cinema, I found over a hundred such movies.

This week I noticed that a trio of TV commercials from Liberty Mutual Insurance seems to be influenced by this format. Each of these “Responsibility” ads compresses network principles into thirty seconds, while also adding a Twilight-Zoneish time warp. You can watch the ads here.

Each commercial begins by showing a woman and a pizza-delivery kid at a street corner. She thrusts out her arm to keep him from striding into the path of a car.

Somebody sees the woman’s act of kindness, and then that person is shown doing something kind. Someone else sees the second person and then is shown being kind, and so on. For example, in one variant a man across the street notices the woman and the delivery boy, and then he helps a lady with a baby get off a bus. He is watched by a man at the bus stop, who then is shown helping someone else. Like a benign contagion, solicitude spreads from person to person.

So we have a very slight set of connections: A is seen by B who is seen by C and so on. This patterning seems comparable to the shifting plotlines and viewpoints we find in some network films, with each character connected by accident and spatial proximity. The framings orient us by overlapping bits of space; the pizza boy’s red jacket gives us a landmark in the foreground, as does the bus in the later shot.

More interestingly, all three ads start with the same situation, the woman and the pizza boy, but they move on to different B’s, C’s, etc. In the variant I just mentioned, the man watching is sitting across the street. In another one, the woman’s act of responsibility is observed by the biker behind her, and he becomes B in a new chain of connections, setting out a traffic cone to warn drivers.

The biker is visible in the first shot of the other ads (see above), but there he plays no role. In the third ad, a man watching from above sees the pizza-boy mishap. (His point-of-view shot is at the top of this post.)

So, as with Jason’s and Pam’s conversation in Bourne, we have a narrative node or pivot that is contextualized differently in another film. What allows this to work, I suggest, is a piece of our social intelligence–our understanding that chance can connect people—plus our cultural experience with storytelling, particularly network narratives. The oddest twist, though, comes at the end of each ad, which like the Bourne trilogy offers an interesting repetition.

Each string of actions ends with the woman we saw at the beginning observing someone else’s act of kindness. For example, in one ad she sees a man at an airport baggage conveyor help an old man snag his bag.

And then once more we see her halt the pizza-delivery kid. So each ad gives us a network something like this: A-B-C-D-…-A. The action moves in a circle, back to the woman as a witness, and then back to her at the pedestrian crossing, once more protecting the delivery boy.

Huh? The ending of the ad suggests that by seeing another person acting responsibly, the woman is more inclined to warn the boy. But that act, with the same boy, was what set off the chain of charity in the first place. In strictly realistic terms, we’ve got a sort of möbius strip (no, not a Moby strip), where the head is glued to the tail and the whole cycle is in a twist.

Well, consider the filmmakers’ problem. How could you end it otherwise? The cascade of charity could go on indefinitely, which is not a plausible scenario for a thirty-second spot. Instead, the swallowing-the-tail tactic brings the pattern to a satisfying conclusion, while providing an event that can be the jumping-off point for another cycle in another ad. The last shot of any of the ads is the first shot of any of the others. You can enjoy the whole cycle as a mini-movie that jumps back in time to the original act of kindness at the corner.

Of course the pattern isn’t meant to be realistic. The message is that small acts can make a difference, and that Liberty Mutual tries to live up to an ideal of responsibility in its business. The themes are presented in an oblique, rather poetic story that suggests that kindness is catching and that even as strangers we are bound to help one another. Significantly, the act that’s presented through multiple replays is the most consequential: the woman is in effect saving the pizza boy’s life.

Both the Bourne movies and “Responsibility” show that studying film narrative is endlessly interesting, and storytellers can be quite creative. There’s always room in life for this.

Legacies

DB here:

By now James Mangold’s 3:10 to Yuma has had its critics’ screenings and sneak previews. It’s due to open this weekend. The reviews are already in, and they’re very admiring.

Back in June, I saw an early version as a guest of the filmmaker and I visited a mixing session, which I chronicled in another entry. I was reluctant to write about the film in the sort of detail I like, because I wasn’t seeing the absolutely final version and because it would involve giving away a lot of the plot.

I still won’t offer you an orthodox review of the film, which I look forward to seeing this weekend in its final theatrical form. Instead, I’ll use the film’s release as an occasion to reflect on Mangold’s work, on his approach to filmmaking, and on some general issues about contemporary Hollywood.

An intimidating legacy

It seems to me that one problem facing contemporary American filmmakers is their overwhelming awareness of the legacy of the classic studio era. They suffer from belatedness. In The Way Hollywood Tells It, I argue that this is a relatively recent development, and it offers an important clue to why today’s ambitious US cinema looks and sounds as it does.

In the classic years, there was asymmetrical information among film professionals. Filmmakers outside the US were very aware of Hollywood cinema because of the industry’s global reach. French and German filmmakers could easily watch what American cinema was up to. Soviet filmmakers studied Hollywood imports, as did Japanese directors and screenwriters. Ozu knew the work of Chaplin, Lubitsch, and Lloyd, and he greatly admired John Ford. But US filmmakers were largely ignorant of or indifferent to foreign cinemas. True, a handful of influential films like Variety (1925) and Potemkin (1925) made an impact on Hollywood, but with the coming of sound and World War II, Hollywood filmmakers were cut off from foreign influences almost completely. I doubt that Lubitsch or Ford ever heard of Ozu.

Moreover, US directors didn’t have access to their own tradition. Before television and video, it was very difficult to see old American films anywhere. A few revival houses might play older titles, but even the Museum of Modern Art didn’t afford aspiring film directors a chance to immerse themselves in the Hollywood tradition. Orson Welles was considered unusual when he prepared for Citizen Kane by studying Stagecoach. Did Ford or Curtiz or Minnelli even rewatch their own films?

With no broad or consistent access to their own film heritage, American directors from the 1920s to the 1960s relied on their ingrained craft habits. What they took from others was so thoroughly assimilated, so deep in their bones, that it posed no problems of rivalry or influence. The homage or pastiche was largely unknown. It took a rare director like Preston Sturges to pay somewhat caustic respects to Hollywood’s past by casting Harold Lloyd in Mad Wednesday (1947), which begins with a clip from The Freshman. (So Soderbergh’s use of Poor Cow in The Limey has at least one predecessor.)

Things changed for directors who grew up in the 1950s and 1960s. Studios sold their back libraries to TV, and so from 1954 on, you could see classics in syndication and network broadcast. New Yorkers could steep themselves in classic films on WOR’s Million-Dollar Movie, which sometimes ran the same title five nights in a row. There were also campus film societies and in the major cities a few repertory cinemas. The Scorsese generation grew up feeding at this banquet table of classic cinema.

In the following years, cable television, videocassettes, and eventually DVDs made even more of the American cinema’s heritage easily available. We take it for granted that we can sit down and gorge ourselves on Astaire-Rogers musicals or B-horror movies whenever we want. Granted, significant areas of film history are still terra incognita, and silent cinema, documentary, and the avant-garde are poorly served on home video. Nonetheless, we can explore Hollywood’s genres, styles, periods, and filmmakers’ work more thoroughly than ever before. Since the 1970s, the young American filmmaker has faced a new sort of challenge: Now fully aware of a great tradition, how can one keep from being awed and paralyzed by it? How can a filmmaker do something original?

I don’t suggest that filmmakers have decided their course with cold calculation. More likely, their temperaments and circumstances will spontaneously push them in several different directions. Some directors, notably Peckinpah and Altman, tried to criticize the Hollywood tradition. Most tried simply to sustain it, playing by the rules but updating the look and feel to contemporary tastes. This is what we find in today’s romantic comedy, teenage comedy, horror film, action picture, and other programmers.

More ambitious filmmakers have tried to extend and deepen the tradition. The chief example I offer in The Way is Cameron Crowe’s Jerry Maguire, but this strategy also informs The Godfather, American Graffiti, Jaws, and many other movies we value. I also think that new story formats like network narratives and richly-realized story worlds have become creative extensions of the possibilities latent in classical filmmaking.

James Mangold’s career, from Heavy onward, follows this option, though with little fanfare. He avoids knowingness. He doesn’t fill his movies with in-jokes, citations, or homages. Instead, he shows the continuity between one vein of classical cinema and one strength of indie film by concentrating on character development and nuances of performance. In an industry that demands one-liners and catch-phrases sprinkled through a script, Mangold offers the mature appeal of writing grounded in psychological revelation. In a cinema that valorizes the one-sheet and special effects and directorial flourishes, he begins by collaborating with his actors. He is, we might say, following in the steps of Elia Kazan and George Cukor.

Sandy

By his own account, Mangold awakened to these strengths during his formative years at Cal Arts, where he studied with Alexander Mackendrick. Mackendrick directed some of the best British films of the 1940s and 1950s, but today he is best known for Sweet Smell of Success.

Seeing it again, I was struck by the ways that it looks toward today’s independent cinema. It offers a stinging portrait of what we’d now call infotainment, showing how a venal publicist curries favor with a monstrously powerful gossip columnist. It’s not hard to recognize a Broadway version of our own mediascape, in which Larry King, Oprah, and tmz.com anoint celebrities and publicists besiege them for airtime. While at times the dialogue gets a little didactic (Clifford Odets did the screenplay), the plotting is superb. Across a night, a day, and another night, intricate schemes of humiliation and aggression play out in machine-gun talk and dizzying mind games. It’s like a Ben Jonson play updated to Times Square, where greed and malice have swollen to grandiose proportions, and shysters run their spite and bravado on sheer cutthroat adrenalin.

Sweet Smell was shot in Manhattan, and James Wong Howe innovated with his voluptuous location cinematography. “I love this dirty city,” one character says, and Wong Howe makes us love it too, especially at night.

Today major stars use indie projects to break with their official personas, and the same thing happens in Mackendrick’s film. Burt Lancaster, who had played flawed but honorable heroes, portrayed the columnist J. J. Hunsecker with savage relish. “My right hand hasn’t seen my left hand in thirty years,” he remarks. Tony Curtis, who would go on to become a fine light comedian, plays Sidney Falco as a baby-faced predator, what Hunsecker calls “a cookie full of arsenic.” Falco is sunk in petty corruption, ready to trade his girlfriend’s sexual favors for a notice in a hack’s column. Hunsecker and Falco, host and parasite, dominator and instrument, are inherently at odds, but in a breathtaking scene they double-team to break the will of a decent young couple. There are no heroes. Nor, as Mangold points out in his Afterword to the published screenplay, do we find any of that “redemption” that today’s producers demand in order to brighten a bleak story line.

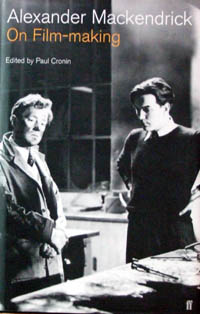

Mackendrick must have been a wonderful teacher. On Film-Making, Paul Cronin’s published collection of his course notes, sketches, and handouts, forms one of our finest records of a director’s conception of his art and craft. Offering a sharp idea on every page, the book should sit on the same shelf with Nizhny’s Lessons with Eisenstein.

Mangold became a willing apprentice to Mackendrick. “He taught me more craft than I could articulate, but beyond that, he showed me how hard one had to work to make even a decent film.” (1) Mangold’s afterword to the Sweet Smell screenplay offers a precise dissection of the first twenty minutes. He shows how the script and Mackendrick’s direction prepare us for Hunsecker’s entrance with the utmost economy. Mangold traces out how five of Mackendrick’s dramaturgical rules, such as “A character who is intelligent and dramatically interesting THINKS AHEAD,” are obeyed in the film’s first few minutes. The result is a taut character-driven drama, operating securely within Hollywood construction while opening up a sewer in a very un-Hollywood way. Surely Sandy Mackendrick’s boldness helped Mangold find his own way among the choices available to his generation.

Movies for grownups

Mangold has often mentioned his love for classic Westerns; on the DVD commentary for Cop Land he admits that he wanted it to blend the western with the modern crime film. But why Delmer Daves’ 3:10 to Yuma?

The Searchers, Rio Bravo, and The Man Who Shot Liberty Valance were objects of veneration for directors of the Bogdanovich/ Scorsese/ Walter Hill generation, while Yuma seemed to be a more run-of-the-mill programmer. To American critics in the grips of the auteur theory, Delmer Daves sat low in the canon, and Glenn Ford and Van Heflin offered little of the star wattage yielded by John Wayne, James Stewart, and Dean Martin. In retrospect, though, I think that you can see what led Mangold to admire the movie.

For one thing, it’s a close-quarters personal drama. It doesn’t try for the mythic resonance of Shane or The Searchers or Liberty Valance, and it doesn’t relax into male camaraderie as Rio Bravo does. The early Yuma simply pits two sharply different men against one another. Ben Wade is a robber and killer who is so self-assured that he can afford to be courteous and gentle on nearly every occasion. Dan Evans is a farmer so beaten down by bad weather, bad luck, and loss of faith in himself that he takes the job of escorting the killer to the train depot.

That dark glower that made Glenn Ford perfect for Gilda and The Big Heat slips easily into the soft-spoken arrogance of Wade. His self-assurance makes it easy for him to play on all of Evans’ doubts and anxieties. In counterpoint, Van Heflin gives us a man wracked by inadequacy and desperation; his flashes of aggression only betray his fears. In the end, his courage is born not of self-confidence but of sheer doggedness and a dose of aggrieved envy. He has taken a job, he needs the money to provide for his family, and, at bottom, it’s not right that a man like Wade should flourish while Evans and his kind scrape by. As in Sweet Smell of Success, the drama is chiefly psychological rather than physical, and the protagonist is far from perfect.

Daves shows the struggle without cinematic flourishes, in staging and shooting as terse as the prose in Elmore Leonard’s original story. Deep-focus images and dynamic compositions maintain psychological pressure, and as ever in Hollywood films, undercurrents are traced in postures and looks, as when Wade in effect takes over Evans’ role as father at the dinner table.

One more factor, a more subliminal one, may have attracted Mangold to Daves’ western. 3:10 to Yuma was released in 1957, about two months after Sweet Smell of Success. Both belong to a broader trend toward self-consciously mature drama in American movies. Faced with dwindling audiences, more filmmakers were taking chances, embracing independent and/or East Coast production, and offering an alternative to teenpix and all-family fare. 1957 is the year of Bachelor Party, Edge of the City, A Face in the Crowd, The Garment Jungle, A Hatful of Rain, The Joker Is Wild, Mister Cory (also with Tony Curtis), No Down Payment, Pal Joey, Paths of Glory, Peyton Place, Run of the Arrow, The Strange One, The Tarnished Angels, The Three Faces of Eve, Twelve Angry Men, and The Wayward Bus. These films and others featured loose women, heroes who are heels, and “adult themes” like racial prejudice, rape, drug addiction, prostitution, militarism, political corruption, suburban anomie, and media hucksterism. (Who says the 1950s were an era of cozy Republican values and Leave It to Beaver morality?) Today many of these films look strained and overbearing, but they created a climate that could accept the doggedly unheroic Evans and the proudly antiheroic Sidney Falco.

3:10 x 2

Mangold’s remake is a re-imagining of Daves’ film, more raw and unsparing. Both films give us the journey to town and a period of waiting in a hotel room. In the original, Daves treats the sequences in the hotel room as a chamber play. Each of Wade’s feints and thrusts gets a rise out of Evans until they’re finally forced onto the street to meet Wade’s gang and the train. Mangold has instead expanded the journey to the railway station, fleshing out the characters (especially Wade’s psychotic sidekick) and introducing new ones. This leaves less space for the hotel room scenes, which are I think the heart of Daves’ film.

At the level of imagery, the new version is firmly contemporary. The west is granitic; men are grizzled and weatherbeaten. When Wade and Evans get into the hotel room, Daves’ low-angle deep-focus compositions are replaced by the sort of brief singles that are common today. As in all Mangold’s work, minute shifts in eye behavior deepen the implications of the dialogue.

Similarly, the cutting, the speediest of any Mangold film, is in tune with contemporary pacing. At an average of 3 seconds per shot, it moves at twice the tempo of Daves’ original. Mangold has been reluctant to define himself by a self-conscious pictorial style. “I think writer-directors have less of a need sometimes to put a kind of obvious visual signature over and over again on their movies . . . . I don’t need to go through this kind of conscious effort to become the director who only uses 500mm lenses.” (2)

Mangold puts his trust in well-carpentered drama and nuanced performances. Daves begins his Yuma with Wade’s holdup of a stage, linking his movie to hallowed Western conventions, but Mangold anchors his drama in the family. His film begins and ends with Evans’ son Bill, who’s first shown reading a dime novel. We soon see his father, already tense and hollowed-out. At the end Mangold gives us a resolution that is more plausible than that of Leonard’s original or Daves’ version. What the new version loses by letting Evans’ wife Alice drop out of the plot it gains by shifting the dramatic weight to Bill in the final moments. The conclusion is drastically altered from the original, and it shocked me. But given Mangold’s admiration for the shadowlands of Sweet Smell, it makes sense.

In an interview Mangold remarks that Mackendrick distrusted film school because it stressed camera technique and didn’t prepare directors to work with actors. “It’s a giant distraction in film schools that in a way, by avoiding the world of the actor, young filmmakers are avoiding the most central relationship of their lives in the workplace.” (3) As we’d expect from Mangold’s other films, the central performances teem with details. Bale cuts up Crowe’s beefsteak, and Crowe gestures delicately with his manacles when he softly demands that the fat be trimmed. Bale’s gaunt, limping Evans, a Civil War casualty, becomes an almost spectral presence; his burning glances reveal a man oddly propelled into bravery by his failures. Crowe’s Ben Wade, for all his intelligence and jauntiness, is oddly unnerved by this farmer’s haunted demeanor. Actors and students of performance will be kept busy for years studying the two films and the way they illustrate different conceptions of the characters and the changes within mainstream cinema.

Sometimes I think that Hollywood’s motto is Tell simple stories with complex emotions. The classic studio tradition found elemental situations and used film technique and great performers to make sure that the plot was always clear. Yet this simplicity harbored a turbulent mixture of contrasting feelings, different registers and resonances, motifs invested with associations, sudden shifts between sentiment and humor. In his commitment to vivid storytelling and psychological nuances, Mangold has found a vigorous way to keep this tradition alive. Sandy would have been proud.

(1) James Mangold, “Afterword,” Clifford Odets and Ernest Lehman, Sweet Smell of Success (London: Faber, 1998), 165.

(2) Quoted in Stephan Littger, The Director’s Cut: Picturing Hollywood in the 21st Century (New York: Continuum, 2006), 317.

(3) Quoted in Littger, 313.

PS 9 Sept: Susan King’s article in the Los Angeles Times explains that Mangold first got acquainted with Daves’ film in Mackendrick’s class. “We would break down the dramatic structure of the film. This one really got under my skin, partly because it always really moved me. It also felt original in scope in that it was very claustrophobic and character-based.” King’s article gives valuable background on the difficulties of getting the film produced.

[insert your favorite Bourne pun here]

DB here:

You may be tired of hearing about The Bourne Ultimatum, but the world isn’t.

It’s leading in the international market ($52 million as of 29 August) and has yet to open in thirty territories. It’s expected, as per this Variety story, to surpass the overseas total of $112 million for the previous installment, The Bourne Supremacy. According to boxofficemojo.com, worldwide theatrical grosses for the trilogy are at $721 million and counting. Add in DVD and other ancillaries, and we have what’s likely to be a $2-$3 billion franchise.

There’s every reason to believe that the success of the series, plus the critical buzz surrounding the third installment, will encourage others to imitate Paul Greengrass’s run-and-gun style. In an earlier blog, I tried to show that despite Greengrass’s claims and those of critics:

(1) The style isn’t original or unique. It’s a familiar approach to filmmaking on display in many theatrical releases and in plenty of television. The run-and-gun look is one option within today’s dominant Hollywood style, intensified continuity.

(2) The style achieves its effect through particular techniques, chiefly camerawork, editing, and sound.

(3) The style isn’t best justified as being a reflection of Jason Bourne’s momentary mental states (desperation, panic) or his longer-term mental state (amnesia).

(4) In this case the style achieves a visceral impact, but at the cost of coherence and spatial orientation. It may also serve to hide plot holes and make preposterous stunts seem less so.

I got so many emails and Web responses, both pro and con, that I began to worry. Did I do Ultimatum an injustice? So I decided to look into things a little more. I rewatched The Bourne Identity, directed by Doug Liman, and Greengrass’s The Bourne Supremacy on DVD and rewatched Ultimatum in my local multiplex. My opinions have remained unchanged, but that’s not a good reason to write this followup. I found that looking at all three films together taught me new things and let me nuance some earlier ideas. What follows is the result.

For the record: I never said that I got dizzy or nauseated. The blog entry did, however, try to speculate on why Greengrass’s choices made some viewers feel queasy. Some of those unfortunates registered their experiences at Roger Ebert’s site. For further info, check Jim Emerson’s update on one guy who became an unwilling receptacle.

Another disclaimer: For any movie, I prefer to sit close to the screen. In many theatres, that means the front row, but in today’s multiplexes sitting in the front row forces me to tip back my head for 134 minutes. So then I prefer the third or fourth row, center. From such vantages I’ve watched recent shakycam classics like Breaking the Waves and Dogville, as well as lesser-known handheld items like Julie Delpy’s Looking for Jimmy. My first viewing of Ultimatum was from the fifth row, my second from the third. (1)

Finally: There are spoilers ahead, pertaining to all three films.

How original?

Someone who didn’t like the films would claim that they’re almost comically clichéd. We get titles announcing “Moscow, Russia” and “Paris, France.” You can poke fun at lines of dialogue like “What connects the dots?” and “You better get yourself a good lawyer” and the old standby “What are you doing here?” But these are easy to forgive. Good films can have clunky dialogue, and you don’t expect Oscar Wilde backchat in an action movie.

More significantly, all three films are quite conventionally plotted. We have our old friend the amnesiac hero who must search for his identity. Neatly, the word bourne means a goal or destination. It also means a boundary, such as the line between two fields of crops–just as our hero is caught between everyday civil law and the extralegal machinery of espionage.

The film’s plot consists of a series of steps in the hero’s quest. Each major chunk yields a clue, usually a physical token, that leads to the next step. Add in blocking figures to create delays, a hierarchy of villains (from swarthy and unshaven snipers to jowly, white-haired bureaucrats), a few helpers (principally Nicky and Pam), and a string of deadlines, and you have the ingredients of each film. (Elsewhere on this site I talk about action plotting.) The last two entries in the series are pretty dour pictures as well; the loss of Franka Potente eliminates the occasional light touch that enlivened Bourne Identity right up to her nifty final line.

All three films rely on crosscutting Bourne’s quest with the CIA’s efforts to find him, usually just one step behind. Within that structure most scenes feature stalking, pursuits, and fights. In the context of film history, this reliance on crosscutting and chases is a very old strategy, going back to the 1910s; it yields one of the most venerable pleasures of cinematic storytelling.

Mixed into the films is another long-standing device, the protagonist plagued by a nagging suppressed memory. Developing in tandem with the external action, the fragmentary flashbacks tease us and him until at a climactic moment we learn the source: in Supremacy, Bourne’s murder of a Russian couple; in Ultimatum, his first kill and his recognition of how he came to be an assassin. The device of gradually filling in the central trauma goes back to film noir, I think, and provides the central mysteries in Hitchcock’s Spellbound and Marnie.

By suggesting that the films are conventional, I don’t mean to insult them. I agree with David Koepp, screenwriter of Jurassic Park and Carlito’s Way, who remarks:

There are rules and expectations of each genre, which is nice because you can go in and consciously meet them, or upend them, and we like it either way. Upend our expectations and we love it—though it’s harder—or meet them and we’re cool with it because that’s all we really wanted that night at the movies anyway. (2)

There can be genuine fun in seeing the conventions replayed once more.

The series makes some original use of recurring motifs, the most obvious being water. The first shot of the first film shows Jason floating underwater; in the second film he bids farewell to the drowned Marie underwater; and in the last shot of no. 3, we see him submerged in the East River, closing the loop, as if the entire series were ready to start again. There’s also a weird parallel-universe moment when Pam tells Jason his real name and birthdate. She does it in 2, and then, as if she hasn’t done it before, tells him again in 3, in what seem to be exactly the same words. In 3, though, it has a fresh significance as a coded message, and a new corps of CIA staff is listening in, so probably the second occurrence is a deliberate harking-back. Or maybe Bourne’s amnesia has set in again. [Note of 9 Sept: Oops! Something that’s going on here is more interesting than I thought. See this later entry.]

The look of the movies

The big arguments about Ultimatum center on visual style. The filmmakers and some critics, notably Anne Thompson, have presented the style as a breakthrough. But again, the movie is more traditional than it might first appear.

In a film of physical action, the audience needs to be firmly oriented to the space and the people present. The usual tactic is to present transitional shots showing people entering or leaving the arena of action. What might be filler material in a comedy or drama—characters driving up, getting out of their vehicles, striding along the pavement, entering the building—is necessary groundwork in an action sequence. So we see Bourne arriving on the scene, then his adversaries arriving and deploying themselves, in the alternation pattern typical of crosscutting. Surprisingly, for all the claims made about the originality of Greengrass’s style in 2 and 3, he is careful to follow tradition and give us lots of shots of people coming and going, setting up the arena of confrontation.

Then the director’s job is just beginning. Traditionally, once the scene gets going, the positions and movement of the figures in the action arena should be clearly maintained. Likewise, it’s considered sturdy craftsmanship to delineate the overall space of the scene and quietly prepare the audience for key areas—possible exits, hiding places, relations among landmarks. (See this entry for how one modern director primes spaces in an action scene.) I claimed in the earlier entry that such basic orienting tasks aren’t handled cogently in Ultimatum, and a second viewing of the scene in Waterloo Station and the chase across the Tangier rooftops hasn’t made me change that opinion. Later I’ll discuss how Greengrass and some commentators have defended the loss of orientation in such scenes.

All the films in the trilogy employ traditional strategies of setting up the action sequences, but the series displays an interesting stylistic progression. In Identity, Liman gives us mostly stable framings and reserves handheld bits for moments of tension and point-of-view shots. He saves his fastest cutting for fights and chases.

Supremacy displays a more mixed style. It contains passages of wobbly, decentered framing, but those exist alongside more traditionally shot scenes—stable framing, with smooth lateral dollying and standard establishing shots. There are some aggressive cuts, as in the crisp emphasis on the sniper at the start of the first pursuit, but on the whole the editing isn’t more jarring than usual these days. We also get, as I mentioned in the earlier entry, some almost willfully obscure over-the-shoulder shots, and occasionally we get the eye-in-the-corner technique that would become more prominent in Ultimatum.

As we’d expect, in Supremacy the bounciest camerawork and choppiest editing are found in the sequences of the most energetic physical action. Most of the surveillance scenes get a little bumpy, while the chases are wilder. The most extreme instance, I think, is the very last chase in the tunnel, when Bourne avenges the death of Marie and slams the sniper’s vehicle into an abutment. As in Identity, Supremacy arrays its technique along a continuum, saving the most visceral techniques for the most bruising action sequences.

So Ultimatum raises the stakes by applying the run-and-gun style in a more thoroughgoing way. Everything is dialed up a notch. The flashbacks are more expressionistic than those of Supremacy: instead of dark hallucinations we get blinding, bleached-out glimpses of torture and execution, in staggered and smeared stop-frames. The conversation scenes are bumpier and more disjointed; the cat-and-mouse trailings are more disorienting, with jerky zooms and distracted framings; the full-bore action scenes are even more elliptical and defocused. It’s as if the visual texture of Supremacy’s tunnel chase has become the touchstone for the whole movie.

That texture I tried to describe in the earlier entry. Greengrass relies on camerawork, cutting, and sound to convey the visceral impact of action, rather than showing the action itself. The most idiosyncratic choices include framing that drifts away from the subject of the shot, oddly offcenter compositions, and a rate of cutting that masks rather than reveals the overall arc of the action. Some critics have liked the film’s technique, some have hated it, but I think my description stands as a fair account of the destabilizing tactics on the screen, and a likely source of some spectators’ vertigo.

Run-and-gun, with a gun

The style of Ultimatum is a version of what filmmakers call run-and-gun: shot-snatching in a pseudo-documentary manner. This approach has a long history in American film. The bumpy handheld camera, I tried to show in The Way Hollywood Tells It, has been repeatedly rediscovered, and every time it’s declared brand new. I have to admit I’m startled that critics, who probably have seen Body and Soul, A Hard Day’s Night, The War Game, Seven Days in May, or Medium Cool, continue to hail it as an innovation. Today it’s a standard resource for fictional filmmaking, to be used well or badly.

What’s at issue here are the role it plays and the effects it achieves. In Lars von Trier’s The Idiots, I’d argue that handheld work becomes genuinely disorienting because the camera is scanning the scene spontaneously to grab what emerges. But von Trier’s roaming camera yields rather long takes compared to what we find in the Bourne series. Greengrass is practicing what I called, in Film Art and The Way Hollywood Tells It, intensified continuity.

This approach breaks down a scene so that each shot yields one, fairly straightforward piece of information. The hero arrives at the station. Car pulls up; cut to him getting out; cut to him walking in. Traditional rules of continuity—matching screen direction, eyelines, overall positions in the set—are still obeyed, but the dialogue or physical action tends to be pulverized into dozens of shots, each one telling us one simple thing, or simply reiterating what a previous shot has shown. (Oddly, sometimes intensified continuity seems more, rather than less, redundant than traditional continuity cutting.)

Within the intensified continuity approach, we can find considerable variation. I argued that one highly mannered version can be found in Tony Scott’s later work, where the shots are fragmented to near-illegibility and treated as decorative bits. You can find Scott’s signature look tawdry and overwrought, but Liman and Greengrass belong in the same tradition. All three directors rely on telephoto lenses, for example, and they have recourse to well-proven techniques for rendering hallucinatory states of mind, as in these multiple-exposure shots, one from Man on Fire and the second from Bourne Supremacy.

Despite the stylistic differences among the three Bourne films, the basic one-point-per-shot premises remain in place. Here’s a passage from Supremacy. Jason gets off the train in Moscow. We get an establishing shot of the station as the train pulls in, followed by a shot of him getting off, moving left to right. The establishing shot is a smooth craning movement down, but the following shot is a little shaky.

Then we get a blurry shot of him walking, taken with a very long lens. A head-on shot like this traditionally creates a transition allowing the filmmaker to cross the axis of action, letting Jason walk right to left in the next shot.

Cut to closer view: Jason turns his head slightly. Cut to what he sees, in a shaky pov: Cops.

The answering handheld shot shows Jason reacting. As he strides on purposefully, a still closer shot accentuates his eye movement and adds a beat of tension.

Jason turns and goes to the pay phones, followed by the unsteadicam, then turns his head watchfully. A smooth match-on-action cut brings us closer.

But then Jason turns back to his task, and suddenly a passerby blots out the frame.

When the frame clears, a jouncy shot shows Jason’s hands thumbing a phone book. This might be a continuation of the same shot, or the result of a hidden cut. I suggest in The Way Hollywood Tells It that such stratagems are common in intensified continuity: blotting out the frame, flashing strobe lights, and other devices can create a pulsating rhythm akin to that of cutting. Jason turns the pages, and a jump cut shows him on another page.

Cut back to him scanning the pages. He writes down an address, in a very shaky framing.

Cut back again to Jason, and another smooth match-on-action brings us back to the slightly fuller framing we’ve seen already. Greengrass shoots with at least two cameras simultaneously, which can facilitate match-cutting like this.

Jason strides toward us, going out of focus. Then a stable long shot pans with him, moving left as before. He leaves the station.

A very simple piece of action has been broken into many shots, some of them restating what we’ve already seen. In the days of classic studio filming, most directors wouldn’t have given us so many shots of Bourne leaving the train platform; a single tracking shot would have done the trick. A single shot could have shown us Jason at the phone, scribbling down the address. As a result, the whole passage is cut faster than in the classic era. The sequence lasts only forty-one seconds but shows sixteen shots. Two to three seconds per shot is a common overall average for an action picture today. The rapid cutting helps create that bustle that Hollywood values in all its genres.

It’s clear, I think, that here the run-and-gun technique is laid over the premises of intensified continuity, letting each shot isolate a bit of narrative information to make sure we understand, even at the risk of reiterating some bits. Jason has arrived in Moscow, he has to be careful because cops are everywhere, now he needs an address, he finds it, he’s writing it down . . . . All of this action is bookended by long shots that pointedly show us the character arriving at the station and leaving it.

I suggested in the earlier entry that in Ultimatum, Greengrass takes more risks than in the previous film. He still assigns one piece of information per shot, but now that piece is sometimes not in focus, or slips out of the center of the frame, or is glimpsed in a brief close-up. The September issue of American Cinematographer, which arrived as I was completing this entry, explains that he encouraged his camera operators to follow their own instincts about focus and composition. (3) Seeing Ultimatum again, I realized that the film can get away with this sideswiping technique by virtue of certain conventions of genre and style.

A telltale clue, like the charred label left over from a car bomb, can be given a fleeting close-up because physical tokens are carrying us from scene to scene throughout the film and we’re on the lookout for them. A sniper racing away out of focus in the distance is a character we’ve seen before, and he’s spotted in frame center before he dodges away. The larger patterning of shots relies on crosscutting or point-of-view alternation (Jason looks/ what he sees/ Jason reacts), and so the framing of any shot can be a little less emphatic. Given intensified continuity’s emphasis on tight close-ups for dialogue, faces can be framed a little loosely, trusting us to pick up on what matters most—changes in facial expression.

In short, because there’s only one thing to see, and it’s rather simple, and it’s the sort of thing we’ve seen before in other films and in this film, it can be whisked past us. This tactic crops up from time to time in Liman’s first installment, when a shot seems to fumble for a moment before surrendering the key piece of data. For example, Jason is striding through the hotel and a wobbly point-of-view shot brings the hotel’s evacuation map only partly into view.

Again, Greengrass takes a visual device that was used occasionally in Identity and extends it through an entire film.

Realism, another word for artifice

Finally, what’s the purpose behind Ultimatum’s thoroughgoing exploitation of run-and-gun? I quoted Greengrass’s claim that it conveys Bourne’s mental states, and some critics have rung variations on this. Of course the cutting is choppy and the framing is uncertain. The guy’s constantly scanning his environment, hypersensitive to tiny stimuli. Besides, he’s lost his memory! Yet the same treatment is applied to scenes in which Bourne isn’t present—notably the scenes in CIA offices. Is Nicky constantly alert? Is Pam suffering from amnesia?

Alternatively, this style is said to be more immersive, putting us in Bourne’s immediate situation. This is a puzzling claim because cinema has done this very successfully for many years, through editing and shot scale and camera movement. Don’t Rear Window and the Odessa Steps sequence in Potemkin and the great racetrack scene in Lubitsch’s Lady Windermere’s Fan thrust us squarely into the space and demand that we follow developing action as a side-participant? I think that defenders need to show more concretely how Greengrass’s technique is “immersive” in some sense that other approaches are not. My guess is that that defense will go back to the handheld camera, distractive framing, and choppy cutting . . . all of which do yield visceral impact. But why should we think that they yield greater immersion?

I think we get a clue by recalling that Supremacy and Ultimatum display the run-and-gun strategy that Greengrass employed in Bloody Sunday and United 93. This approach implies something like this: If several camera operators had been present for these historic events, this is something like the way it would have been recorded. We get a reality reconstructed as if it were recorded by movie cameras. I say cameras because we’re telling a story and need to change our angles constantly; a scene couldn’t approximate a record of the event as experienced by a single participant or eyewitness. In movies, the camera is almost always ubiquitous.

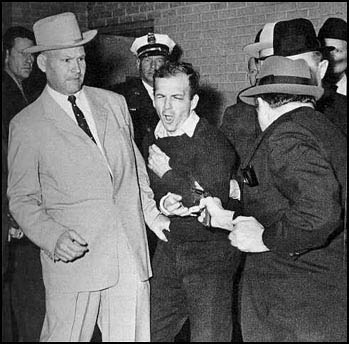

Recall the famous TV footage of Jack Ruby shooting Lee Harvey Oswald. There the lone camera lost some of the most important information by being caught in the crowd’s confusion and swinging wildly away from the action. But in a fiction film, we can’t be permitted to miss key information. So with run-and-gun, the filmmakers in effect cover the action through a troupe of invisible, highly mobile camera operators. That’s to say, another brand of artifice.

In general, the run-and-gun look says, I’m realer than what you normally see. In the DVD supplement to Supremacy, “Keeping It Real,” the producers claimed that they hired Greengrass because they wanted a “documentary feel” for Bourne’s second outing. Greengrass in turn affirms that he wanted to shoot it “like a live event.” And he justifies it, as directors have been justifying camera flourishes and fast cutting for fifty years, as yielding “energy. When you get it, you get magic.” (4)

I’d say that the style achieves visceral disorientation pretty effectively, but some claims for it are exaggerated. So far Greengrass has matched the style to hospitable genres, either historical drama or fast-paced espionage. But isn’t immersion something we should try for in all genres? Wouldn’t High School Musical 2 gain energy and magic if it were shot run-and-gun? If a director tried that, some critics might say that it added intensity and realism, and suggest that it puts us in the minds and hearts of those peppy kids in a way that nothing else could.

I finish this overlong post by invoking Andrew Davis, director of The Fugitive (which back in 1993 gave us fragmentary flashbacks à la Bourne Ultimatum) and the admirable Holes.

When you think about the beginnings: everything was very formal and staged and composed, and then years later people said, “We want it shaky and out of focus and have some kind of honest energy to it.” And then it became a phony energy, because it was like commercials, where they would make everything have a documentary feel when they were selling perfume, you know? (5)

Whether you agree with me or not, I’m glad that The Bourne Ultimatum raised issues of film style that audiences really care about. I’m eager to look more closely at the movie when the DVD is released, but don’t worry–I don’t expect to mount another epic blog entry. However, I do have an item coming up that talks about how we assess what filmmakers say about their movies….

(1) I’m aware that this can be an uncomfortable option, but not as bad as with music. Many years ago I sat in the front row of a John Zorn concert, and I don’t think my ears ever recovered.

(2) Quoted in Rob Feld, “Q & A with David Koepp,” in Josh Friedman and David Koepp, War of the Worlds: The Shooting Script (New York: Newmarket Press, 2005), 136-137.

(3) See Jon Silberg, “Bourne Again,” American Cinematographer (September 2007), 34-35, available here.

(4) Oddly, though, in the DVD supplement to The Bourne Identity, Frank Oz says that Liman brought “a rough-edged, very energetic” feel to the project, thanks to his indie roots. Interestingly, the energy is attributed to Liman’s abilities as a camera operator, a skill that enables him to shoot things quickly. The same supplement offers a familiar motivation for the film’s purportedly jittery style: the hero is trying to figure out who he is, and so is the viewer. Just as the films revamp a basic plot structure each time, perhaps the producers’ rationales get recycled too.

(5) Quoted in The Director’s Cut: Picturing Hollywood in the 21st Century, ed. Stephan Littger (New York: Continuum, 2006), 96.

PS: On a wholly unrelated subject, Kristin answers a question on Roger Ebert’s Movie Answer Man column: What was the first movie?

PPS 5 January 2008: Steven Spielberg weighs in on the Bourne style here, confirming that he’s a more traditional filmmaker. Thanks to Fred Holliday and Brad Schauer for calling my attention to his remarks.